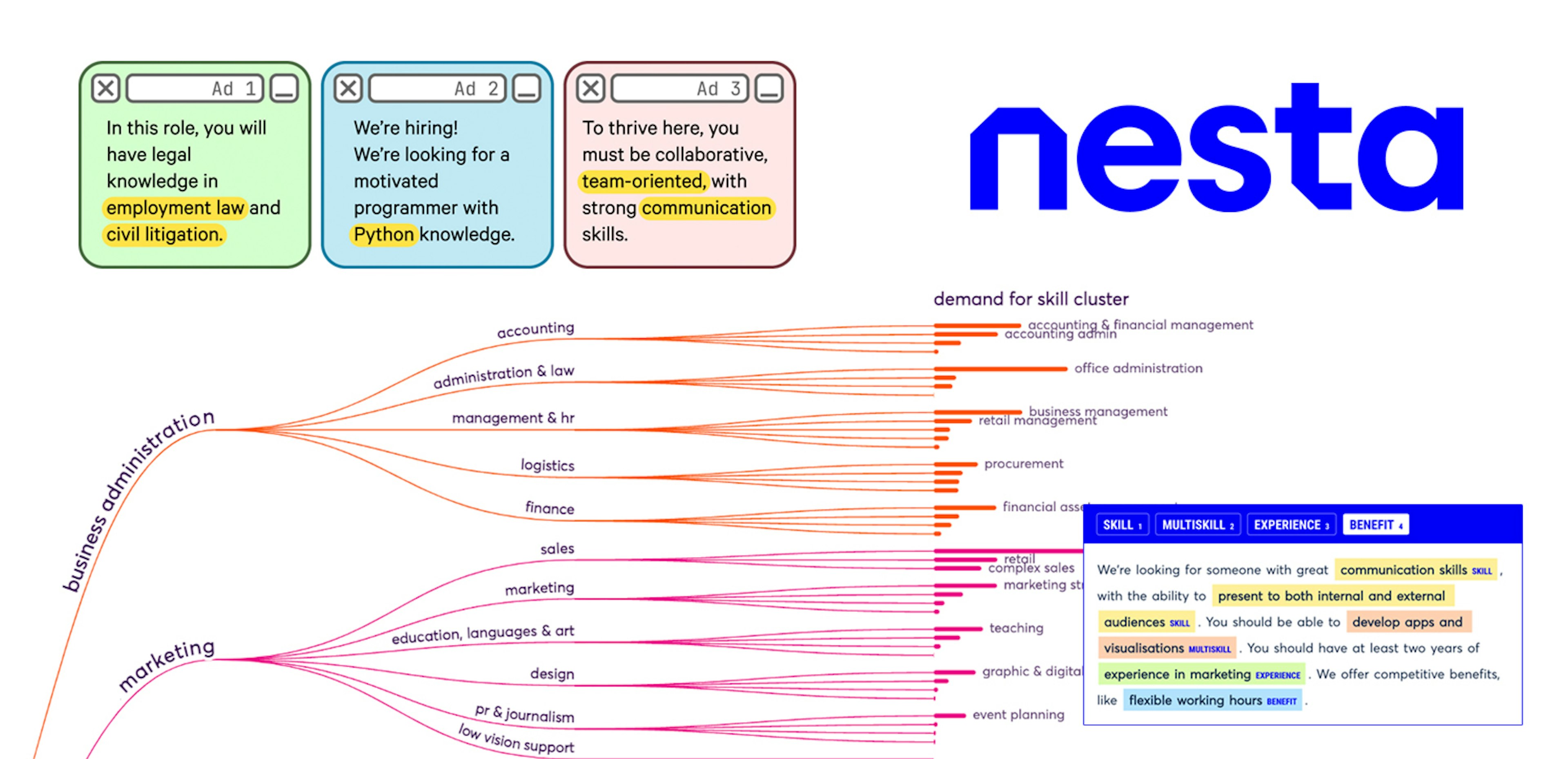

In 2016 we trained a sense2vec model on the 2015 portion of the Reddit comments corpus, leading to a useful library and one of our most popular demos. That work is now due for an update. In this post, we present a new version of the library, new vectors, new evaluation recipes, and a demo NER project that we trained to usable accuracy in just a few hours.

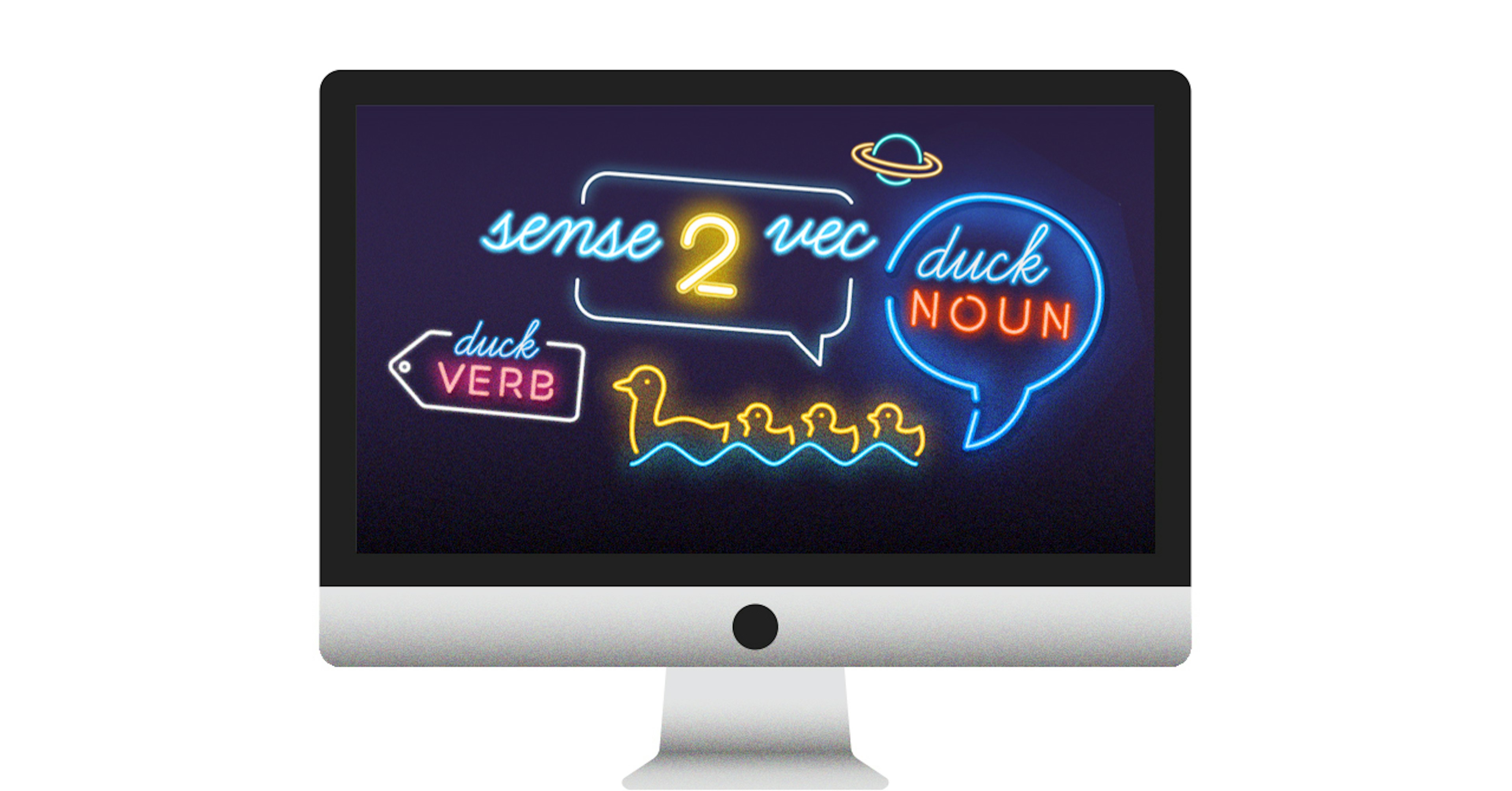

sense2vec (Trask et. al, 2015) is a twist on

the word2vec family of algorithms that lets you learn more interesting word

vectors. Before training the model, the text is preprocessed with linguistic

annotations, to let you learn vectors for more precise concepts.

Part-of-speech tags are particularly helpful: many words have very different

senses depending on their part of speech, so it’s useful to be able to query for

the synonyms of duck|VERB and duck|NOUN separately. Named entity annotations

and noun phrases can also help, by letting you learn vectors for multi-word

expressions.

We’ve loved this idea for a long time, so it’s now time to revisit it and take it a little bit further. First, we trained a new sense2vec model on the 2019 Reddit comments, which makes for an interesting contrast to the previous 2015 vectors. We’ve also updated the interactive demo and given the sense2vec library an overdue update, taking advantage of spaCy v2’s pipeline component and extension attribute systems. Finally, we prepared an end-to-end named entity recognition use-case for the technique, to show sense2vec’s practical applications. We used our annotation tool Prodigy to quickly bootstrap a terminology list using the sense2vec vectors, which we then used to produce a rule-based baseline for a new NER task. We then used Prodigy to quickly annotate training and evaluation data, which we then combine with spaCy’s transfer-learning functionality to train a model that achieves 82.1% accuracy.

Exploring the vectors

We’ve had a lot of fun exploring our previous sense2vec model. The annotations make the results much more informative, especially the results for named entities or niche concepts. Contrasting the results between the 2015 and 2019 models provides an interesting new avenue to explore. Four years is a relatively short time on the scale of language change, but it’s easy to find examples of words, topics and entities that had very different associations in 2015.

| Query | Most similar 2015 | Most similar 2019 |

|---|---|---|

| Billy Ray Cyrus 1 | Madonna, Kurt Cobain, Michael Jackson, Eminem, Tupac | Lil Nas X, Old Town Road, Post Malone, Lil Pump, Miley Cyrus |

| Harvey Weinstein 2 | Martin Scorsese, Adam Sandler, Quentin Tarantino, Johnny Depp | Kevin Spacey, Bill Cosby, R. Kelly, Bryan Singer |

| AOC 3 | BenQ, Monitor, 21.5, 60Hz, monitor-i2269vw, ViewSonic | Alexandria Ocasio-Cortez, Bernie Sanders, Nancy Pelosi, Elizabeth Warren |

| flex 4 | grip, stiffen, lift, tighten | internet muscles, showoff, brag |

| ghost 5 | stealth, insta, ether, blast, teleport | unmatch, mutual fade, flake, friendzone |

Examples like Billy Ray Cyrus show how artists can take on new associations as they reinvent themselves over time. The results also show new word senses, and new public figures such as Alexandria Ocasio-Cortez. Social and political events can also cast public figures in a very different light. Many of the crimes discussed by the #MeToo movement were widely known in 2015, but even figures with several decades of credible accusations were discussed primarily in association with their work, rather than their crimes. The two vector models show several examples where that has changed. It will be interesting to see how the associations for these figures evolve further. Music, films and other art will always prompt new discussion, so the associations may shift back over time.

sense2vec reloaded: the updated library

sense2vec is a Python package to

load and query vectors of words and multi-word phrases based on

part-of-speech tags and entity labels. It can be used as a standalone

library, or as a [GitHub repo](spacy.io/usage/processing-pipelines”, true) spaCy

pipeline component] with convenient custom attributes and methods for accessing

all relevant phrases in a Doc object, or querying vectors and most similar

entries for tokens, noun phrases or entity spans. For more examples and the full

API documentation, see the

GitHub repo.

Standalone usage

Usage as a spaCy component

Evaluating the vectors

Word vectors are often evaluated with a mix of small quantitative test sets, and informal qualitative review. Common test sets include tasks such as analogical reasoning, or human similarity judgments, using a Likert scale. However, established test sets often don’t correspond well to the data being used, or the definition of similarity that the application requires. In practice, most data scientists fall back on the qualitative evaluation: they look at a few example queries, and ship the model if things mostly look okay. While few data scientists would endorse this as best practice, the qualitative evaluation does have important advantages. There are many different definitions of similarity, so whether one model is “better” than another is subjective. However, even if the evaluation is subjective, it’s still good to be a bit more rigorous. With better methodology, you can get more reliable results for the same amount of time spent inspecting the vectors.

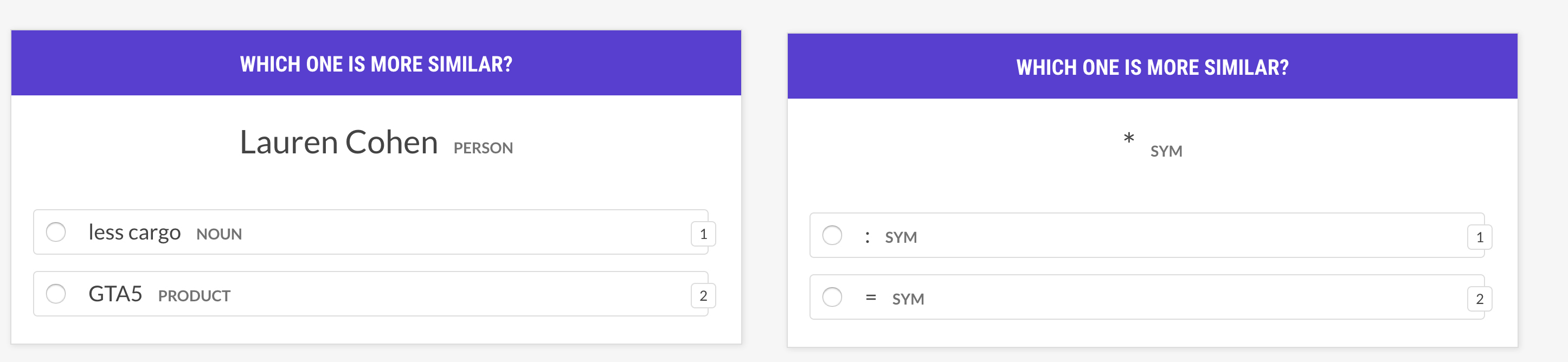

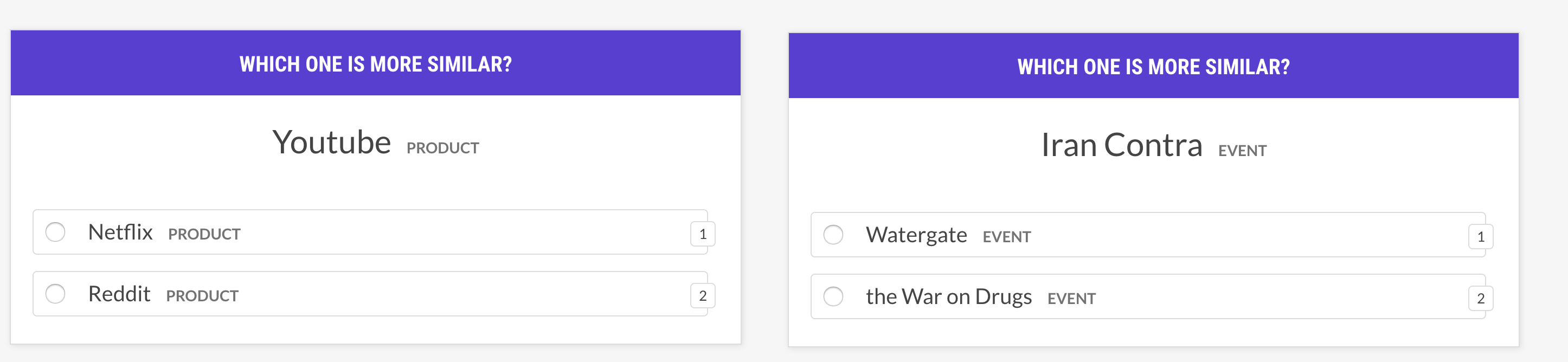

Our evaluation workflows are implemented as Prodigy recipes, but you can also implement your own version as a command line script or using a static file. We initially experimented with an evaluation that would pick three random phrases from the most frequent entries and ask whether A was more similar to B or C. If you mostly agree with the model here, we could conclude that the trained vectors are reasonable and representative. It was a nice idea in theory. However, the lack of constraints and randomness produced some very interesting questions that were funny, but ultimately not very useful:

To solve this, we needed to limit the random selection to entries with the same

sense and limit the included senses so you’re not just evaluating the similarity

of punctuation (is ; more similar to ? or !?) or arbitrary interjections

(is rofl more similar to loool or lmao?). Other strategies we implemented

only select candidates from the most similar phrases of the target phrase or the

first candidate phrase. You can try out the different workflows in Prodigy using

the

sense2vec.eval recipe

with the --strategy option specifying the candidate selection strategy

random, most_similar or most_least_similar.

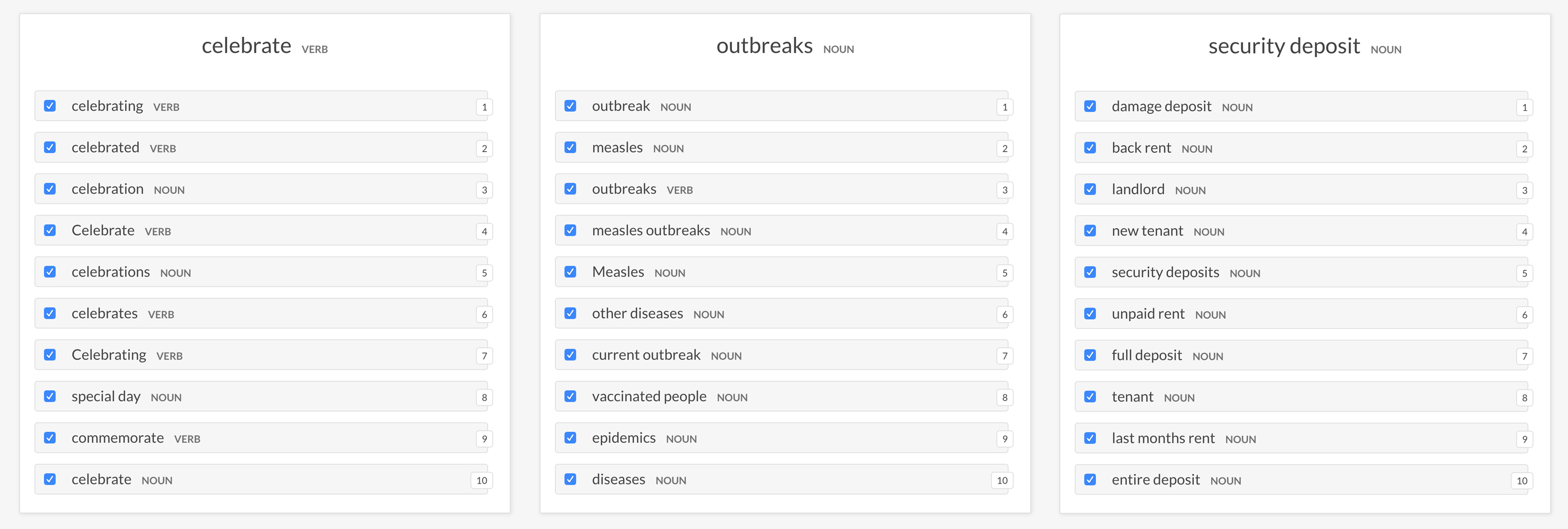

Instead of evaluating similarity triples, you can also opt for a more direct

evaluation strategy. In the

sense2vec.eval-most-similar

recipe, you’re shown a query word and its nearest neighbors. You can then mark

which of the neighbors you consider to be undesirable results. This approach is

especially helpful for lower quality models, where there are some results that

stand out as clearly in error.

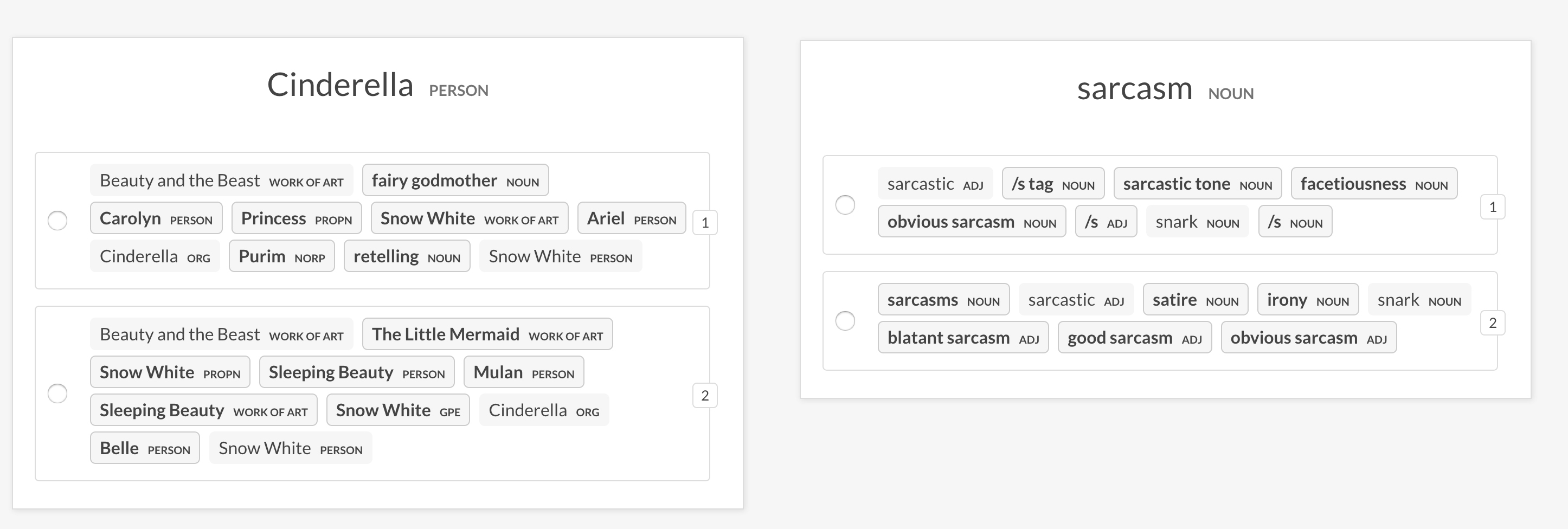

A/B evaluation

The

sense2vec.eval-ab workflow

performs an A/B evaluation of two sense2vec vector models by comparing the most

similar entries they return for a random phrase. The UI shows two randomized

options with the most similar entries of each model and highlights the phrases

that differ. At the end of the annotation session the overall stats and

preferred model are shown. This is sort of like a blind taste-test: you

don’t have to know ahead of time what exactly you’re looking for from the

similarity results. It takes little time to compare two models reliably, and you

or another person can revisit the judgments later to check agreement.

We found the A/B evaluation to be the most useful approach when we were developing the vectors for this post. We used it to compare the 2019 and 2015 vector models, and were able to reach a conclusion after only 20 minutes of evaluation. We found that we preferred the 2019 output in 33 cases, the 2015 output in 17 cases, and were indifferent in an additional 51 cases. We had earlier performed a similar evaluation with a draft vectors model trained only on the January 2019 comments, in which we found we decisively preferred the 2015 outputs. We also ran evaluations to decide the choice of algorithm (skipgram with negative samples vs GloVe, preferring the skipgrams output), the window size (5 vs. 15, preferring the narrower window), and character vs word features (choosing to disable FastText’s character ngrams).

Using sense2vec to bootstrap NER models

Let’s say you want to build an application that can detect fashion brands in online comments. Some brand names, like “adidas” or “Louis Vuitton” are pretty unambiguous. Others, like “New Balance” or “The North Face” are trickier, especially if you can’t rely on proper capitalization or correct spelling. To solve this problem, you could train a named entity recognizer to predict fashion brands in context, using labelled examples for training and evaluation and maybe pretrained representations to initialize the model.

The sense2vec library includes Prodigy workflows to speed up your data collection process with sense2vec vectors. Prodigy is a modern, fully-scriptable annotation tool for creating training data for machine learning models. For this example, we used pretrained sense2vec vectors to bootstrap an NER model. In under 2 hours, we created a dataset of 785 training examples and 500 evaluation examples from scratch and trained a model that achieved 82.1% accuracy on the task.

Download code and data

The pattern files, datasets and training and evaluation scripts created for this blog post are available for download in our new projects repo.

View projectCreating a terminology list and rule-based baseline

Even if your end goal is to train a neural network model, it’s often useful to start off with a rule-based baseline that you can evaluate the model against later. Maybe you’re training a model and are getting an accuracy of 85% on your task. That’s not bad. But if you know that a simple gazetteer or a few regular expressions will get you to 92% accuracy on the same dataset, the model looks much less impressive. You probably wouldn’t want to be shipping that as the only end-to-end solution.

One way to quickly set up a rule-based baseline or proof of concept is to create

a list of some of the most frequent instances of the entities and then match

them in the data. If you have a few examples of the entities you’re looking for,

sense2vec can help you find similar terms. All you have to do is select the

terms you want to keep and update the query vector with the already selected

terms. The

sense2vec.teach

recipe implements this as Prodigy workflow:

prodigy sense2vec.teach s2v_fashion_brands ./s2v_reddit_md --seeds "adidas, Louis Vuitton, BOSS, New Balance"Here’s how it works under the hood. For each seed term, we can call

Sense2vec.get_best_sense to find the best-matching sense with the highest

frequency. Using Sense2vec.most_similar, we can then query the vectors for the

most similar entries compared to the average of the seed vectors:

s2v = Sense2Vec().from_disk("./s2v_reddit_md")seed_keys = [s2v.get_best_sense(seed) for seed in seeds]most_similar = s2v.most_similar(seed_keys, n=10)As we annotate, the answers are sent back to the server and stored in the database. With each new batch of yes/no decisions, we can update our list of query phrases used in the similarity calculation, so the suggestions we see stay consistent with our previous decisions. Once you get into a good rhythm, annotation is super quick – in about 10 minutes, we were able to annotate 145 phrases in total and create a word list of 100 fashion brands.

To extract brand names from our raw data, we can now convert the word list to

match patterns. If you’ve used spaCy’s

sense2vec.to-patterns rule-based

Matcher] before, you’re probably familiar with the syntax: each pattern

consists of a list of dictionaries describing the individual tokens and their

attributes. The

sense2vec.to-patterns

helper converts the previously created dataset to a case-insensitive (or

optionally case-sensitive) JSONL file.

fashion_brand_patterns.jsonl (excerpt)

The EntityRuler is

the most convenient way to use match patterns for rule-based named entity

recognition. It can be loaded from a JSONL and added to the processing pipeline.

The matched spans are then added to the doc.ents.

import spacyfrom spacy.pipeline import EntityRuler

nlp = spacy.blank("en")ruler = EntityRuler(nlp).from_disk("./fashion_brands_patterns.jsonl")nlp.add_pipe(ruler)

doc = nlp("I like Ralph Lauren and UNIQLO.")print([(ent.text, ent.label_) for ent in doc.ents])# [('Ralph Lauren', 'FASHION_BRAND'), ('UNIQLO', 'FASHION_BRAND')]nlp.to_disk("./rule-based-model")Depending on your use case, you might even choose to stop right here, or simply continue to build a larger terminology list. Many entity recognition problems have a fairly limited set of entities, with perhaps a few thousand different names. Gathering these entities as a list is a fine strategy to recognise them in running text – you don’t necessarily need a statistical model. Even if the entities are potentially ambiguous, like “Amazon”, you can often train a text classifier to recognise your domain, and then apply your rule-based entity approach. This is often both cheaper to annotate and more accurate, as you’re basing the statistical decision on a much wider context. However, with social media text like Reddit, rule-based approaches will usually hit a lower accuracy limit. In cases like this, the initial rule-based approach can be used as part of an annotation process, to quickly collect training and evaluation data so you can move forward with a statistical approach.

Bootstrapping annotation with patterns

The entity ruler and its patterns serialize with the nlp object when you call

nlp.to_disk. So you can export and package the model to use it in your

application, or load it into Prodigy’s

ner.make-gold recipe, which

streams in text, pre-highlights the doc.ents and lets you correct them. You

could also use the EntityRuler to pre-label data in spaCy, export it and then

correct it using any other tool or process. Even if your rules only get 50% of

the entities right, that’s still a lot less work for you.

The text in raw_data.jsonl was extracted from Reddit and includes comments

from two popular fashion subreddits,

r/MaleFashionAdvice and

r/FemaleFashionAdvice.

prodigy ner.make-gold ner_fashion_brands ./rule-based-model ./raw-data.jsonl --loader reddit --label FASHION_BRAND --unsegmentedResults

In about 2 hours we worked through 2,516 texts and created 1,735 annotations (1,235 training examples, 500 evaluation examples). As this was a quick project, and we didn’t collect separate development and evaluation sets, we performed only a limited set of experiments, and avoided detailed hyperparameter searches. We’ve limited our experiments to spaCy, but you can use the annotations in any other NER system instead. If you run the experiments, please let us know!

| Accuracy | Words/Sec1 | Data | |||||

|---|---|---|---|---|---|---|---|

| F-Score | Precision | Recall | CPU | GPU | #Ex2 | Work3 | |

| Rule-based baseline | 48.4 | 96.3 | 32.4 | 130k | 130k | 0 | ~15m |

| spaCy NER: blank | 65.7 | 77.3 | 57.1 | 13k | 72k | 1235 | ~2h |

| spaCy NER: vectors4 | 73.4 | 81.5 | 66.8 | 13k | 72k | 1235 | ~2.5h |

| spaCy NER: vectors4 + tok2vec5 | 82.1 | 83.5 | 80.7 | 5k | 68k | 1235 | ~2.5h |

With default settings and no transfer learning, spaCy achieves an F-score of

65.7. On a small dataset like this, we recommend using pretrained word

vectors, and using them in combination with the

spacy pretrain command. spaCy makes both

techniques quite easy to use, and together they improve the F-score to 82.05 on

this data. This matches up well with our general experience: we’ve found that

spacy pretrain usually improves accuracy by about as much as pretrained word

vectors. When the training corpus is large (over one million words), the impact

from both techniques is usually small – less than 1 F-score. But on smaller

datasets, it’s not uncommon to see the 50% error reduction demonstrated here.

The spacy pretrain command implements a cloze-style language modelling

objective, similar to the one introduced by

Devlin et al. (2018). However, we have an

additional innovation to make the objective work well for spaCy’s small

architectures: instead of predicting the exact word ID, we predict the word’s

vector, using a static embeddings table (we use the GloVe vectors for this,

with a cosine loss). We ran spacy pretrain for one epoch on about 1 billion

words of Reddit comments, which completed in roughly 8 hours on a single GTX

2080 Ti. spaCy’s default token-vector encoding settings are a depth 4

convolutional neural network with width 96, and hash embeddings with 2000 rows.

These settings result in a very small model: the trainable weights are only 3.8

MB in total, including the word vectors. To take better advantage of the

pretraining, we increased these slightly, to depth 8, width 128 and 10,000 rows.

The larger model is still quite small: only 17.8 MB. Here’s the exact command we

ran:

spacy pretrain /path/to/data.jsonl en_vectors_web_lg /path/to/output--batch-size 3000 --max-length 256 --depth 8 --embed-rows 10000--width 128 --use-vectors -gpu 0We hope that the resources we’ve presented in this post will be useful to both researchers and practictioners, especially the library and the trained vector models. There’s also a lot further the idea could be taken. We mostly replicated the preprocessing logic from our 2016 post, to keep the comparison simple – but all preprocessing steps can now be easily customized. There are many improvements we’re looking forward to trying out, including more precise detection of non-compositional noun phrases, and handling other types of multi-word expression, especially phrasal verbs. Lemmatization might also be a useful preprocess, especially when we apply the same idea to languages other than English.

GitHub repo sense2vec demo Prodigy