The SpanCategorizer is a spaCy component that answers the NLP community’s need to have structured annotation for a wide variety of labeled spans, including long phrases, non-named entities, or overlapping annotations. In this blog post, we’re excited to talk more about spancat and showcase new features to help with your span labeling needs!

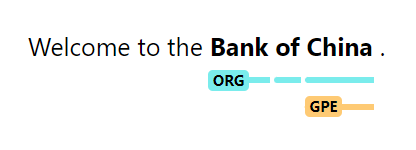

A large portion of the NLP community treats span labeling as an entity extraction problem. However, the two are not the same. Entities typically have clear token boundaries and consist of syntactic units like proper nouns. Spans, on the other hand, can be arbitrary, often made up of noun phrases and sentence fragments. Occasionally, these spans even overlap! Take this example:

The FACTOR label covers a long span of tokens, which is unusual in standard NER. Most NER entities are short and clearly delimited, but this example has long and vague ones. Also, tokens such as “septic,” “shock,” and “bacteremia” belong to more than one span, which makes these annotations incompatible with spaCy’s ner component.

The text above is just one of the many examples you’ll find in span labeling. That’s why, in its v3.1 release, spaCy introduced the SpanCategorizer, a new component that handles arbitrary and overlapping spans. Unlike the EntityRecognizer, the spancat component gives you more flexibility in how you tackle your entity extraction problems. It offers:

- Explicit control of candidate spans. Users can define rules for how spancat obtains likely candidates via suggester functions. Through these, you can bias your model towards precision or recall and customize it further for your use case.
- Access to confidence scores. Unlike ner, a spancat model returns predicted label probabilities over the whole span, giving us more meaningful confidences to threshold against. You can use these values to filter your results and tune the performance of your system.
- Less edge-sensitivity. Sequence-based NER models typically predict one tag per token, which makes them very sensitive to span boundaries. This works well for proper nouns and self-contained expressions, but it is less useful for other types of phrases or for overlapping spans.
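The confidence-score point can be illustrated in plain Python. This is a minimal sketch, not spaCy’s implementation: it assumes each candidate span already carries a probability per label, keeps every label whose probability clears a threshold, and optionally caps how many labels a span may keep. The spancat component exposes similar knobs through its threshold and max_positive settings; the helper name filter_predictions is hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Span = Tuple[int, int]  # (start, end) token offsets, end exclusive


def filter_predictions(
    scores: Dict[Span, Dict[str, float]],
    threshold: float = 0.5,
    max_positive: Optional[int] = None,
) -> List[Tuple[int, int, str, float]]:
    """Keep (start, end, label, prob) for every label scoring >= threshold.

    Hypothetical helper: it mirrors the idea behind spancat's threshold and
    max_positive settings, not their exact implementation.
    """
    kept = []
    for (start, end), label_probs in scores.items():
        # Highest-probability labels first, so max_positive keeps the best ones.
        ranked = sorted(label_probs.items(), key=lambda kv: kv[1], reverse=True)
        if max_positive is not None:
            ranked = ranked[:max_positive]
        kept.extend((start, end, label, p) for label, p in ranked if p >= threshold)
    return kept


# Made-up probabilities for three candidate spans.
scores = {
    (0, 2): {"protein": 0.91, "DNA": 0.12},
    (0, 3): {"protein": 0.30, "DNA": 0.84},
    (1, 3): {"protein": 0.08, "DNA": 0.40},
}
print(filter_predictions(scores))
# [(0, 2, 'protein', 0.91), (0, 3, 'DNA', 0.84)]
```

Lowering the threshold to 0.35 would also admit the (1, 3) DNA prediction, trading some precision for recall.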

How spancat works

From a high-level perspective, we can divide spancat into two parts: the suggester and the classifier. The suggester is a custom function that extracts possible span candidates from the text and feeds them into the classifier. These suggester functions can be completely rule-based, depend on annotations from other components, or use machine learning approaches.
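The simplest rule-based suggester enumerates n-grams. spaCy ships one, registered as spacy.ngram_suggester.v1 and configured with a list of sizes; the plain-Python function below only sketches the idea on a token count. It is not spaCy’s code, which works on batches of Doc objects and returns a Ragged array, and the name ngram_suggester is used here for illustration.

```python
from typing import Iterable, List, Tuple


def ngram_suggester(num_tokens: int, sizes: Iterable[int]) -> List[Tuple[int, int]]:
    """Suggest every contiguous span whose length is in `sizes`.

    Returns (start, end) token offsets with `end` exclusive, grouped by size.
    Sizes are assumed to be positive integers.
    """
    candidates = []
    for size in sizes:
        # A span of this size can start anywhere it still fits in the text.
        for start in range(num_tokens - size + 1):
            candidates.append((start, start + size))
    return candidates


print(ngram_suggester(4, sizes=[1, 2]))
# [(0, 1), (1, 2), (2, 3), (3, 4), (0, 2), (1, 3), (2, 4)]
```

The number of candidates grows roughly with the number of tokens times the number of sizes, which is why a wide size range quickly produces many spans for the classifier to score.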

The suggested spans go into the classifier, which then predicts the labels for every span. By including the context of the whole span, the classifier can catch informative words deep within it. We can divide the classifier into three steps: Embedding, Pooling, and Scoring.

- Embedding: we obtain the tok2vec representation of the candidate spans so we can work on them numerically.
- Pooling: we reduce each span’s sequence of token vectors to a fixed-size vector to make the model more robust, then encode the context using a window encoder.
- Scoring: we perform multilabel classification on these pooled spans, thereby returning model predictions and label probabilities.
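The pooling and scoring steps can be sketched with toy numbers. This is an illustration under stated assumptions, not spancat’s model: the token vectors stand in for tok2vec output, pooling concatenates the mean and the max over the span’s tokens (spaCy’s default reducer, spacy.mean_max_reducer.v1, is built around the same mean and max pooling followed by a hidden layer), and scoring applies an independent sigmoid per label, which is what makes the classification multilabel. The window encoder is omitted, and the weights below are made up rather than learned.

```python
import math
from typing import Dict, List, Sequence

Vector = List[float]


def pool_span(token_vectors: Sequence[Vector], start: int, end: int) -> Vector:
    """Concatenate mean- and max-pooled vectors over tokens[start:end]."""
    if end <= start:
        raise ValueError("span must contain at least one token")
    span = token_vectors[start:end]
    width = len(span[0])
    mean = [sum(v[i] for v in span) / len(span) for i in range(width)]
    maxed = [max(v[i] for v in span) for i in range(width)]
    return mean + maxed


def score_span(pooled: Vector, weights: Dict[str, Vector], biases: Dict[str, float]) -> Dict[str, float]:
    """One independent sigmoid per label, so probabilities need not sum to 1."""
    probs = {}
    for label, w in weights.items():
        z = sum(wi * xi for wi, xi in zip(w, pooled)) + biases[label]
        probs[label] = 1.0 / (1.0 + math.exp(-z))
    return probs


# Toy "tok2vec" output for three tokens, two dimensions each.
vectors = [[1.0, 0.0], [0.0, 2.0], [3.0, 1.0]]
pooled = pool_span(vectors, 0, 2)  # [0.5, 1.0, 1.0, 2.0]
probs = score_span(
    pooled,
    weights={"protein": [1.0, 0.0, 0.0, 0.0], "DNA": [0.0, 0.0, 0.0, 1.0]},
    biases={"protein": 0.0, "DNA": -2.0},
)
print(probs)
```

Because each label gets its own sigmoid, a span can score high for several labels at once, which is what nested or overlapping annotation needs.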

What’s nice about spancat is that it offers enough flexibility for you to customize its architecture. For example, you can swap the MaxoutWindowEncoder for a MishWindowEncoder simply by updating the config file!
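In config.cfg terms, that swap amounts to changing the registered architecture in the encoder block and dropping maxout_pieces, since spacy.MishWindowEncoder.v2 takes only width, window_size and depth. The sketch below shows the edit on a config excerpt held in a Python string, using the standard library’s configparser purely for illustration; spaCy loads configs with its own parser. The section path and values are assumptions modeled on spaCy’s default tok2vec encoder, so check your own config for the exact path.

```python
import configparser
import sys

# Hypothetical excerpt of a spaCy config.cfg; the section path and values
# are assumptions modeled on spaCy's default tok2vec encoder settings.
CONFIG_EXCERPT = """
[components.tok2vec.model.encode]
@architectures = "spacy.MaxoutWindowEncoder.v2"
width = 96
depth = 4
window_size = 1
maxout_pieces = 3
"""

ENCODER_SECTION = "components.tok2vec.model.encode"


def swap_to_mish(config_text: str, section: str = ENCODER_SECTION) -> configparser.ConfigParser:
    """Point the encoder block at the Mish window encoder instead of Maxout."""
    # interpolation=None leaves any spaCy-style ${...} references untouched.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(config_text)
    block = parser[section]
    block["@architectures"] = '"spacy.MishWindowEncoder.v2"'
    # maxout_pieces only applies to the Maxout encoder, so drop it.
    block.pop("maxout_pieces", None)
    return parser


swap_to_mish(CONFIG_EXCERPT).write(sys.stdout)
```

In practice you would make the same two-line change directly in config.cfg; the point is that no Python code in the pipeline needs to change.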

Architecture case study on nested NER

We developed a spaCy project that demonstrates how the architectural differences between ner and spancat lead to vastly different approaches to the same problem. We tackle a common use case in span labeling: nested NER. Here, tokens can be part of multiple spans simultaneously, owing to the hierarchical or multilabel nature of the dataset. Take this sentence, for example:

This text comes from GENIA, a corpus of biomedical literature drawn from MEDLINE abstracts. It contains five labels: DNA, RNA, cell line, cell type, and protein. Here, the span “Human IL4” is by itself a protein. Additionally, “Human IL4 promoter” is a DNA sequence: the promoter region that initiates transcription of the IL4 gene. Both spans contain the tokens “Human” and “IL4”, so these tokens belong to two labeled spans at once, resulting in nested spans.
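To see why nesting clashes with a token-tagging model, here is a minimal sketch that encodes spans as one BIO tag per token. spaCy’s ner actually uses the closely related BILUO scheme, but the one-tag-per-token constraint is the same. The helper to_bio is hypothetical, and the offsets assume “Human IL4 promoter” is tokenized into exactly three tokens.

```python
from typing import List, Tuple

Span = Tuple[int, int, str]  # (start, end, label), end exclusive


def to_bio(num_tokens: int, spans: List[Span]) -> List[str]:
    """Encode spans as one BIO tag per token; raise if two spans claim a token."""
    tags = ["O"] * num_tokens
    for start, end, label in spans:
        for i in range(start, end):
            if tags[i] != "O":
                raise ValueError(f"token {i} already tagged {tags[i]!r}; cannot also be {label!r}")
            tags[i] = ("B-" if i == start else "I-") + label
    return tags


tokens = ["Human", "IL4", "promoter"]
print(to_bio(len(tokens), [(0, 2, "protein")]))  # ['B-protein', 'I-protein', 'O']
print(to_bio(len(tokens), [(0, 3, "DNA")]))      # ['B-DNA', 'I-DNA', 'I-DNA']
# Both spans together need two tags on "Human" and "IL4", so this raises:
# to_bio(len(tokens), [(0, 2, "protein"), (0, 3, "DNA")])
```

Each span is fine on its own, but a single tag sequence cannot hold both, which is the root of the workarounds discussed next.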

Suppose we attempt this problem using the EntityRecognizer. In that case, we have to train one model for each entity type, and then write a custom component that combines their predictions into a coherent set. This approach adds significant complexity, because the number of models grows with the number of labels. It works, but it’s far from ideal.

Another option is to lean into the ner paradigm and preprocess the dataset to remove its nestedness. This approach might involve rethinking our annotations (perhaps merging the overlapping span types into a new label or eliminating them altogether) and turning the nested NER problem into a non-nested entity extraction one. The resulting model won’t predict nested entities anymore.
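One way to eliminate overlaps is to keep only the longest span wherever spans collide. The sketch below does this greedily on (start, end, label) tuples; flatten_spans is a hypothetical helper, though spaCy’s spacy.util.filter_spans applies a similar longest-span-wins rule to Span objects. On the GENIA example it keeps the DNA span and silently drops the nested protein span, which is exactly the information loss described above.

```python
from typing import List, Set, Tuple

Span = Tuple[int, int, str]  # (start, end, label), end exclusive


def flatten_spans(spans: List[Span]) -> List[Span]:
    """Greedily keep the longest spans and drop any span overlapping a kept one."""
    # Longest spans first; on equal length, the earlier start wins.
    ordered = sorted(spans, key=lambda s: (-(s[1] - s[0]), s[0]))
    kept: List[Span] = []
    taken: Set[int] = set()
    for start, end, label in ordered:
        if taken.isdisjoint(range(start, end)):
            kept.append((start, end, label))
            taken.update(range(start, end))
    return sorted(kept)


nested = [(0, 2, "protein"), (0, 3, "DNA")]  # "Human IL4" inside "Human IL4 promoter"
print(flatten_spans(nested))  # [(0, 3, 'DNA')]
```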

With spancat, we can store all spans in a single Doc and train a single model for all labels. In spaCy, these spans live in the Doc.spans attribute under a specified key (spancat uses the key "sc" by default). This approach makes experimentation much more convenient.

We’re currently working on a more detailed performance comparison using various datasets outside the nested NER use case.

New features

With this blog post, we’re bringing some nice additions to spancat! 🎉

Analyze and debug your spancat datasets

From v3.3.1 onwards, spaCy’s debug data command fully supports validation and analysis of your spancat datasets. If you have a spancat component in your pipeline, you’ll receive insights such as the size of your spans, potential overlaps between training and evaluation sets, and more!

The debug data command also reports further characteristics of your spans. For example, you can see how long your spans are or how distinct they are compared to the rest of the corpus. You can then use this information to refine your spancat configuration further.[1]
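The two characteristics mentioned here can be approximated in a few lines. This sketch assumes tokenized spans and a tokenized corpus, and computes the mean span length and a span distinctiveness score as the KL divergence between the unigram distribution of the span tokens and that of the whole corpus (natural log), following the idea in Papay et al. It is an illustration only: debug data’s exact computation (smoothing, log base, per-label grouping) may differ, and all function names are hypothetical.

```python
import math
from collections import Counter
from typing import Dict, List, Sequence


def unigram_distribution(tokens: Sequence[str]) -> Dict[str, float]:
    """Relative frequency of each token type."""
    counts = Counter(tokens)
    total = sum(counts.values())
    return {tok: count / total for tok, count in counts.items()}


def span_distinctiveness(span_tokens: Sequence[str], corpus_tokens: Sequence[str]) -> float:
    """KL(P_spans || P_corpus) over unigrams, in nats.

    Assumes every span token also occurs in the corpus (true when the spans
    are drawn from it), so no smoothing is needed.
    """
    p = unigram_distribution(span_tokens)
    q = unigram_distribution(corpus_tokens)
    return sum(p_t * math.log(p_t / q[tok]) for tok, p_t in p.items())


def mean_span_length(spans: List[Sequence[str]]) -> float:
    """Average number of tokens per span."""
    return sum(len(span) for span in spans) / len(spans)


corpus = "the cat sat on the mat".split()
print(span_distinctiveness(["cat"], corpus))  # ≈ math.log(6): "cat" is 1 of 6 tokens
print(mean_span_length([["Human", "IL4"], ["Human", "IL4", "promoter"]]))  # 2.5
```

A score near zero means span tokens look like ordinary corpus text; higher scores mean the spans use a vocabulary that sets them apart, which generally makes them easier to learn.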

Generate a spancat config with sensible defaults

The spaCy training quickstart lets you generate config.cfg files with the recommended settings for the pipeline components you choose. We’ve updated the configuration for spancat, giving you more sensible defaults to improve your training!

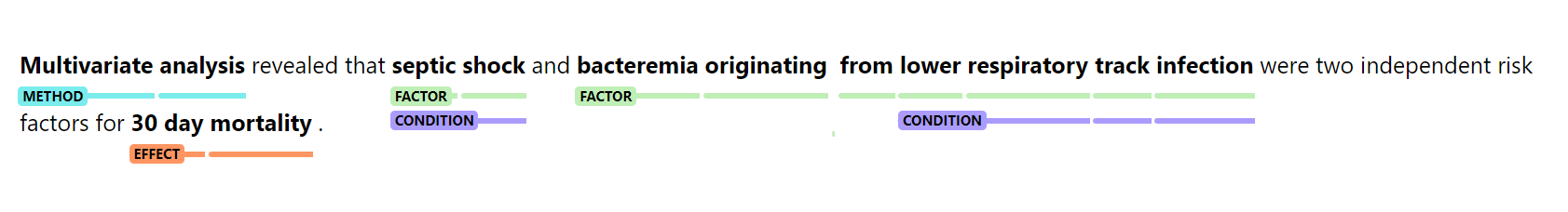

Visualize overlapping spans

We’ve added support for spancat in our visualization library, displaCy. If your dataset has overlapping spans or some hierarchical structure, you can use the new "span" style to display them.

Using displaCy to visualize overlapping spans

As with other displaCy styles, you can configure the look and feel of the span annotations. In this example, we’ve color-coded the spans based on their type:

New array of span suggester functions

The 0.5.0 release of our spacy-experimental repository also adds three new rule-based suggester functions that depend on annotations from other components:

- The subtree-suggester uses dependency annotation to suggest each token together with its syntactic descendants.
- The chunk-suggester suggests noun chunks using the noun chunk iterator, which requires POS and dependency annotation.
- The sentence-suggester uses sentence boundaries to suggest whole sentences as candidates.
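The subtree and sentence suggesters can be pictured with plain token indices. This sketch is an illustration rather than spacy-experimental’s code: it assumes the dependency parse is given as a list of head indices (a root points to itself) and sentence boundaries as one sentence-start flag per token. Both function names are hypothetical, and for a projective parse the subtree of each token is a contiguous range.

```python
from typing import List, Sequence, Tuple

Span = Tuple[int, int]  # (start, end) token offsets, end exclusive


def subtree_suggester(heads: Sequence[int]) -> List[Span]:
    """Suggest each token together with all of its syntactic descendants.

    heads[i] is the index of token i's head; a root token points to itself.
    """
    n = len(heads)
    left = list(range(n))   # leftmost token in each token's subtree
    right = list(range(n))  # rightmost token in each token's subtree
    for i in range(n):
        j = i
        # Walk up to the root, widening every ancestor's edges to include i.
        while heads[j] != j:
            j = heads[j]
            left[j] = min(left[j], i)
            right[j] = max(right[j], i)
    return sorted({(left[i], right[i] + 1) for i in range(n)})


def sentence_suggester(sent_starts: Sequence[bool]) -> List[Span]:
    """Suggest one span per sentence, given a sentence-start flag per token."""
    starts = [i for i, is_start in enumerate(sent_starts) if is_start or i == 0]
    ends = starts[1:] + [len(sent_starts)]
    return list(zip(starts, ends))


# "the big dog barked": "the" and "big" attach to "dog", which attaches to "barked".
print(subtree_suggester([2, 2, 3, 3]))  # [(0, 1), (0, 3), (0, 4), (1, 2)]
print(sentence_suggester([True, False, False, True, False]))  # [(0, 3), (3, 5)]
```

Adding n-gram candidates on top of either list is then just a set union of the two span sets.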

These suggesters can also suggest n-gram spans in addition to their own candidates. Try them out in this spaCy project that showcases how to include them in your pipeline!

Learn span boundaries using SpanFinder

The SpanFinder is a new experimental component that identifies span boundaries by tagging potential start and end tokens. It’s a machine learning approach that suggests fewer but more precise span candidates than the n-gram suggester.
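The boundary-tagging idea can be sketched as follows. Assume a model has already produced, for each token, a probability that it starts a span and a probability that it ends one; thresholding those and pairing each start with each end at or after it yields candidates only where boundaries look likely, instead of every possible n-gram. The function name, the threshold and max_length parameters, and the pairing rule are simplifying assumptions, not the component’s actual implementation.

```python
from typing import List, Optional, Sequence, Tuple


def spans_from_boundaries(
    start_probs: Sequence[float],
    end_probs: Sequence[float],
    threshold: float = 0.5,
    max_length: Optional[int] = None,
) -> List[Tuple[int, int]]:
    """Pair every predicted start token with every predicted end token after it.

    Returns (start, end) offsets with `end` exclusive.
    """
    starts = [i for i, p in enumerate(start_probs) if p >= threshold]
    ends = [i for i, p in enumerate(end_probs) if p >= threshold]
    candidates = []
    for s in starts:
        for e in ends:
            length = e - s + 1  # e is the last token of the span (inclusive)
            if length >= 1 and (max_length is None or length <= max_length):
                candidates.append((s, e + 1))
    return candidates


# Made-up boundary probabilities for "Human IL4 promoter".
start_probs = [0.9, 0.2, 0.1]
end_probs = [0.1, 0.8, 0.7]
print(spans_from_boundaries(start_probs, end_probs))  # [(0, 2), (0, 3)]
```

Here only the two nested gold-like spans are proposed, whereas enumerating all n-grams of sizes 1 to 3 over the same three tokens would propose six.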

When using the n-gram suggester, the number of suggested candidates can grow very large, slowing down spancat training and increasing its memory usage. We designed the SpanFinder to produce fewer candidates and solve these problems. In practice, it makes sense to experiment with different suggester functions to determine what works best for your specific use case.

We’ve prepared a spaCy project that showcases how to use the SpanFinder and how it compares to the n-gram suggester on the GENIA dataset:

Comparison between n-gram and SpanFinder suggesters on GENIA

| Metric | SpanFinder | n-gram (sizes 1-10) |
|---|---|---|
| F-score | 0.7122 | 0.7201 |
| Precision | 0.7672 | 0.7595 |
| Recall | 0.6646 | 0.6847 |
| Suggested candidates | 13,754 | 486,903 |
| Actual entities | 5,474 | 5,474 |
| Candidates / actual entities | 251% | 8894% |
| Entity coverage | 75.61% | 99.53% |
| Speed (tokens/sec) | 10,465 | 4,807 |
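The derived rows of the table follow from simple ratios, assuming the ratio row is suggested candidates divided by actual entities and the coverage row is the share of gold entity spans that appear among the candidates. The sketch below recomputes the ratio row from the counts and checks the F-score as the harmonic mean of precision and recall; the 8894% entry corresponds to 8894.8% truncated rather than rounded. The helper names are hypothetical.

```python
from typing import Set, Tuple

Span = Tuple[int, int]  # (start, end) token offsets


def candidate_ratio(num_candidates: int, num_entities: int) -> float:
    """Suggested candidates per gold entity, as a percentage."""
    return 100.0 * num_candidates / num_entities


def coverage(candidates: Set[Span], gold: Set[Span]) -> float:
    """Percentage of gold entity spans that appear among the candidates."""
    return 100.0 * len(candidates & gold) / len(gold)


def f_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


print(candidate_ratio(13_754, 5_474))   # about 251.3 (SpanFinder)
print(candidate_ratio(486_903, 5_474))  # about 8894.8 (n-gram, sizes 1-10)
print(round(f_score(0.7672, 0.6646), 4))  # 0.7122
```

A suggester can only be as good as its coverage: an entity that is never suggested can never be labeled, which is why the n-gram suggester’s higher coverage shows up as higher recall despite its far larger candidate set.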

Final thoughts

Across the many NLP use cases we see in the community, we’ve recognized that entity extraction falls short for the majority of span labeling problems. Spancat is our answer to this challenge. With these new features, you now have more tools to analyze, train, and visualize your spans. You can use spancat as an alternative to ner or as an additional component in your existing pipeline.

You can find out more about span categorization in the spaCy docs, and be sure to check out our experimental suggesters and example projects. We also appreciate community feedback, so we hope to see you in our discussions forum soon!

Footnotes

1. Most of the span characteristics are based on Papay et al.’s work, Dissecting Span Identification Tasks with Performance Prediction (EMNLP 2020).