With all the hype around Generative AI, many are led to believe it’s the solution to everything. So how can you, as a developer or leader, communicate the nuances and advocate for new and modular solutions that are better, easier and cheaper?

The other day I received an interesting question in my LinkedIn comments:

I find in my work it is hard to communicate the cost savings and simplicity of using NLP vs. “AI” (LLMs). The further I get into spaCy the more I realize how many tasks labeled “AI” can be accomplished more cheaply with way less development time. Any advice on how to effectively communicate the differences?

This is a great question, and you’re not alone. For developers, it’s become quite challenging to navigate the field, with exciting new tools you can’t wait to try, tech bros touting the next revolution, and the resulting expectations within your organization that may or may not reflect reality and best practices.

It’s not a new phenomenon either. During the first AI and chatbot hype around 2016, we met many developers who grew increasingly frustrated after their managers excitedly returned from “World of Watson” in Las Vegas (remember the Super Bowl commercial with Mad Men’s Don Draper?). Some of our earliest consulting projects at Explosion centered around helping teams replace the AI platforms they had bought with their own NLP pipelines, after they realized they could build much better, faster and cheaper systems themselves.

Today, we’re seeing something very similar, just on a larger scale. On the plus side, it’s now much easier to explain what we’re working on and why. Everyone has heard of ChatGPT. You likely won’t have to fight for budgets to work on AI within your organization or need to convince your team that the technology is worth exploring. At the same time, many projects never make it past the prototype stage or have ambitions beyond putting a chat bot in front of it.

Our friends at MantisNLP wrote a great blog post series about their vision for using Generative AI and choosing between open-source tools and proprietary APIs. Their experience matches our thinking:

Quite often however, we find ourselves counseling against using LLMs for a number of use cases because there are cheaper, faster, and better performing alternatives. When all you have is a model like ChatGPT, all problems start to look like LLM problems, even if it is the computational equivalent of hammering a nail with a very costly microscope.

– How we think about Generative AI (MantisNLP)

If you’ve been working in the field for a while, this probably makes sense to you. But how can you communicate it in an environment where “one generative model to rule them all” is widely hailed as the solution to all problems?

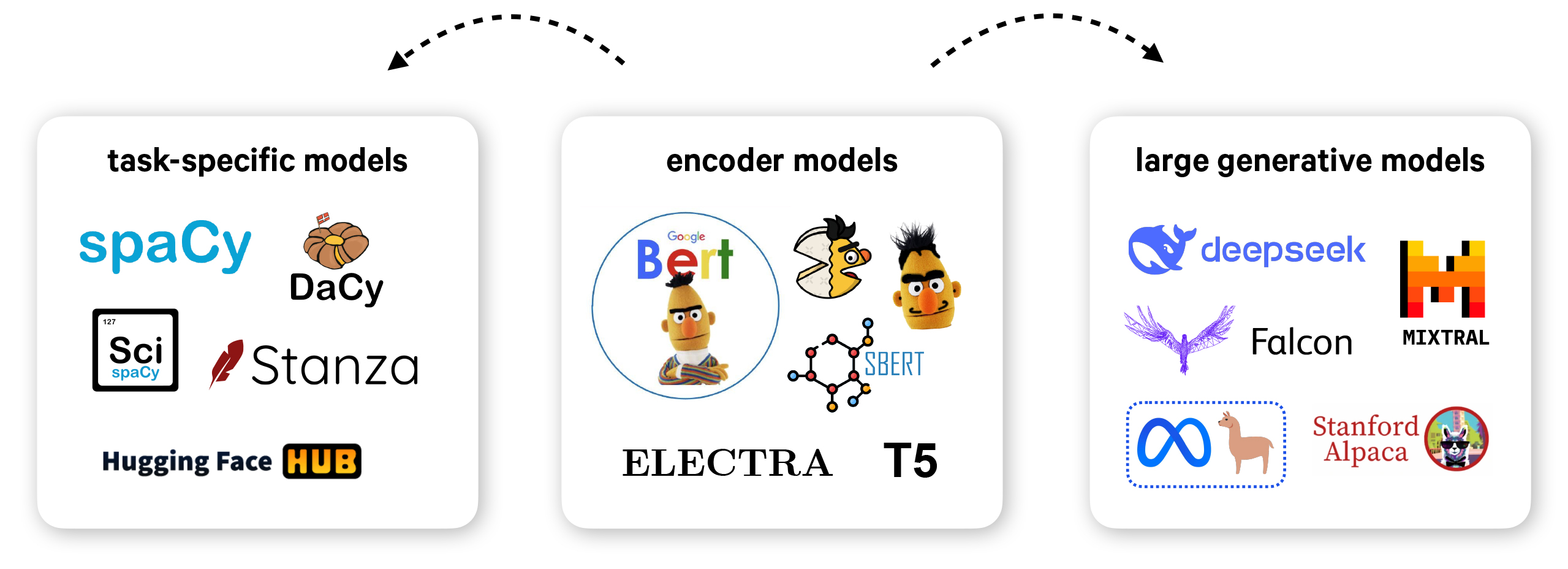

Focus on new workflows

AI has a terminology problem and it’s increasingly unclear what people even mean by it. Everything from task-specific CNN models and BERT embeddings, all the way to generative models and products like ChatGPT, has been called “AI” or “Large Language Model” (LLM) at some point or another. This also means that some parts of the technology may be overlooked in favor of others that feel more approachable, but are often less suited for a particular task.

If you’re talking to stakeholders who are excited about Generative AI, it can easily sound like “We need to use it at all costs!”. Sometimes companies really do just want a prestige project, but more often, the fundamental goal is to deliver business value. They’re asking about Generative AI specifically because they want to know which projects are newly promising. What can we do now that we couldn’t do before?

The answer is that LLMs have a big impact on NLP, especially if you go beyond just text generation at runtime. This isn’t “old NLP” – it’s taking the latest technology a step further. Instead of using large generative models as systems for solving a specific task, you can use them to create systems. This can include writing code, developing rule-based logic, or automating data creation and distilling large models into smaller components that perform only the subset of tasks you’re interested in, and perform them better.
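To make the distillation idea a little more concrete, here is a minimal, hypothetical sketch. It assumes the labels in `LLM_LABELED` were produced by prompting an LLM during development and then reviewed by a person; the example texts, the labels and the `NaiveBayes` class are all invented for illustration and are not part of spaCy, Prodigy or any other library. What it shows is the shape of the workflow: the large model is only used to create data, while the component that actually runs in production is small, fast and fully under your control.

```python
import math
from collections import Counter, defaultdict

# Toy examples standing in for LLM-generated, human-reviewed annotations.
# These texts and labels are invented for illustration only.
LLM_LABELED = [
    ("crude oil prices rose sharply today", "MARKET"),
    ("wheat futures fell on weak demand", "MARKET"),
    ("copper prices hit a new high", "MARKET"),
    ("the company hired a new chief executive", "OTHER"),
    ("our office will be closed on friday", "OTHER"),
    ("the team meeting moved to monday", "OTHER"),
]


def tokenize(text):
    # Deliberately simple whitespace tokenizer for the sketch.
    return text.lower().split()


class NaiveBayes:
    """A tiny multinomial Naive Bayes classifier acting as the 'student' model."""

    def fit(self, examples):
        self.label_counts = Counter(label for _, label in examples)
        self.word_counts = defaultdict(Counter)
        for text, label in examples:
            self.word_counts[label].update(tokenize(text))
        # Shared vocabulary across all labels, used for smoothing.
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text):
        total = sum(self.label_counts.values())
        best_label, best_score = None, -math.inf
        for label, count in self.label_counts.items():
            words = self.word_counts[label]
            denom = sum(words.values()) + len(self.vocab)
            # Log prior plus log likelihoods with add-one (Laplace) smoothing.
            score = math.log(count / total)
            for w in tokenize(text):
                score += math.log((words[w] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Train the small model on the LLM-created data, then use it without the LLM.
student = NaiveBayes().fit(LLM_LABELED)
print(student.predict("oil prices fell"))
```

Naive Bayes is used here only because it is the smallest self-contained stand-in for a task-specific model. In a real project you would train a proper pipeline component on a larger set of reviewed examples and evaluate it against held-out data before relying on it.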

Put the technology into context

We all interact with systems or processes we wish were in some way “smarter”. But business value isn’t just driven by deploying the most cutting-edge thing that nobody’s done before. It’s about finding the right problem that’s easiest to solve. Generative AI is a good way to get computers to talk to humans, but not a good way to get computers to talk to other computers.

This vision is also very much in line with recent news and developments, which I’m sure decision makers at your organization have been following. Do we really need millions in compute resources, and do we really have to rely on Big Tech companies for our AI models? No. We need to work smarter, not harder. With the right techniques and tooling, it’s totally viable to build AI systems in house.

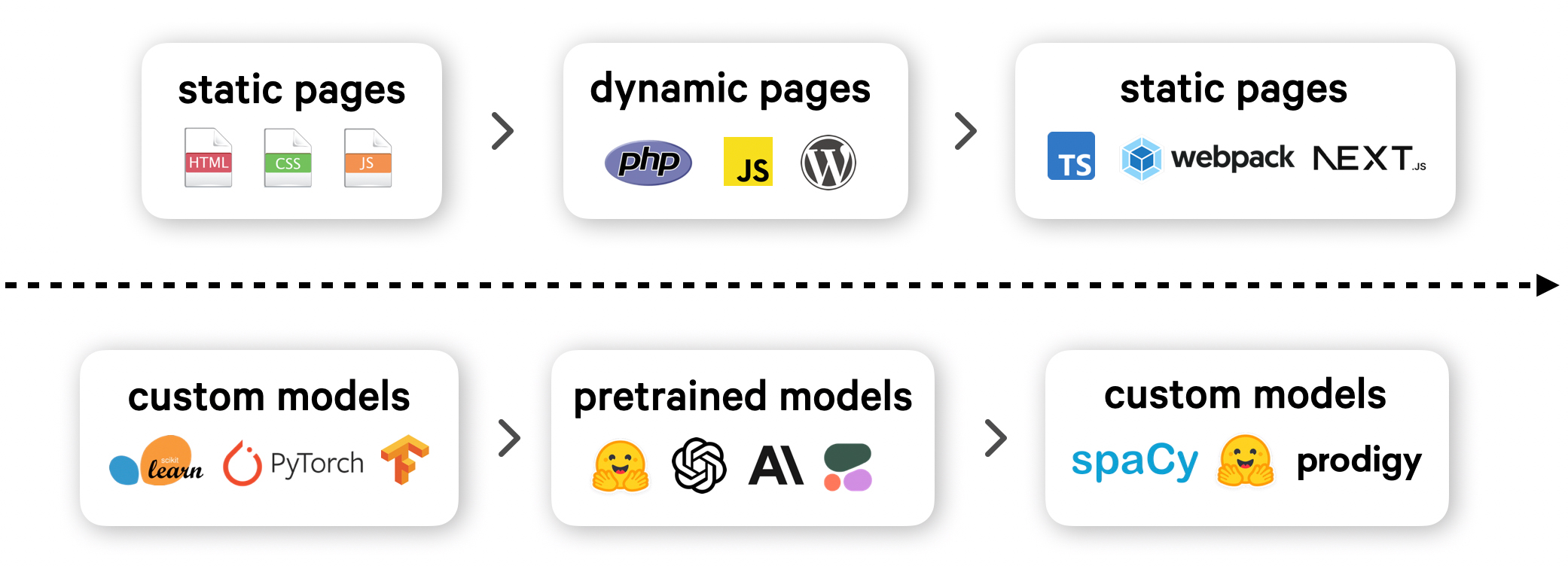

This actually mirrors what we’ve been seeing with another groundbreaking technology: the web. As system requirements became more complex, modern developer tooling and new techniques made it possible to move operational complexity into the development process. Just like the modern JavaScript toolchain automates the build process of static websites and apps, Generative AI can be used during development to write code, create data and distill custom models.

At its core, AI development is simply a different type of software development. Workflows are changing quickly and many new paradigms are emerging – but in the end, certain basic properties of a technology determine whether teams can build on it reliably. We’ve learned over time that as an industry, we want our solutions to be:

- modular, so that we can combine a smaller set of primitives we understand in different ways

- transparent, so that we can debug or prevent issues

- explainable, so that we can build the right mental model of how things are working

- data-private, so that internal data doesn’t leave our servers and we can meet legal and regulatory requirements

- reliable, so that we have stable request times with few failures

- affordable, so that it fits within our budget

All of this is hard to achieve if you’re relying on monolithic black-box models and proprietary APIs. Your job as a developer is to implement solutions that meet requirements like those above, and to do it in the best way possible – and you totally can! The result is state-of-the-art AI, but better. Who would say no to that?

Show, don’t tell

When looking at successful NLP projects and teams, including those we profiled in our case studies, an interesting pattern emerges: The most successful projects are the ones where a subject matter expert identified a problem and picked up the tools to solve it most effectively. Even in large organizations, successful projects are typically spearheaded by a single developer or a small team that just did the thing. Modern developer tooling makes even the smallest teams incredibly productive.

For example, Chris at S&P Global previously worked as a commodities trading market reporter and went on to use LLMs to create data for highly accurate 6 MB models that deliver real-time structured trading insights. He was able to quickly build a prototype by himself, and his team now runs many different specialized pipelines in production. Jordan at Love Without Sound, a music producer and composer, experienced first-hand how artists were losing millions in royalties due to incorrect music metadata, and taught himself programming to build a modular suite of components that tackle the problem with structured NLP.

The biggest bottleneck for custom solutions used to be data creation and annotation. But with transfer learning and tools like Prodigy, this isn’t really the case anymore. It now takes only a few hours of focused work, with iterative tooling and the right automation. I often joke that you’ve probably spent more time getting your GPU to work or CUDA installed than it takes to create data for a specialized model these days. If you’re trying to make the case for developing AI in house, the best argument is a working prototype.

Resources

- A practical guide to human-in-the-loop distillation: How to distill LLMs into smaller, faster, private and more accurate components

- What the history of the web can teach us about the future of AI: How developer tooling makes it possible to develop AI features in house

- Against LLM maximalism: LLMs beyond “one model to rule them all”

- Applied NLP Thinking: How to translate business problems into machine learning solutions

- The Window-Knocking Machine Test: What past innovations can teach us about new products to build

- The AI Revolution Will Not Be Monopolized: How open-source beats economies of scale, even for LLMs

- Prodigy: A modern annotation tool for NLP and machine learning

- How we think about Generative AI (MantisNLP): When to use generative models and LLMs

- How we’re thinking about Generative AI: Proprietary vs. Open Source (MantisNLP): How to choose between proprietary models and APIs and open-source solutions

Case studies

- How S&P Global is making markets more transparent: Case study on real-time commodities trading insights using human-in-the-loop distillation

- How Love Without Sound helps the music industry recover millions in revenue for artists: Case study on AI-powered tools for the music industry and law firms specializing in royalty negotiations

- How GitLab analyzes support tickets and empowers their community: Case study on NLP pipelines for extracting actionable insights from support tickets and usage questions